Navigating the Regulatory Landscape for AI Safety: Balancing Innovation and Control

A balanced examination of governance approaches to keeping artificial intelligence models aligned with human values and safety standards.

Introduction

As artificial intelligence systems grow increasingly sophisticated and pervasive across global society, the question of how to ensure these powerful tools remain aligned with human values has become paramount. Recent developments in foundation models—large AI systems trained on vast datasets that serve as the basis for numerous applications—have accelerated this conversation, bringing unprecedented capabilities alongside novel risks. This analysis examines the evolving governance landscape for AI safety, assessing various regulatory approaches and their implications for innovation, global cooperation, and long-term technological development.

The Current Regulatory Environment

The governance of AI has evolved from theoretical discussions to practical implementation in remarkably short order. Major jurisdictions have taken divergent but increasingly concrete approaches:

European Union: The EU AI Act is the most comprehensive regulatory framework to date. It takes a risk-based approach that imposes stricter requirements on systems deemed high-risk. Foundation models, which the Act terms general-purpose AI models, face specific transparency obligations, with additional safety testing duties for those judged to pose systemic risk, reflecting the EU’s precautionary stance.

United Kingdom: Post-Brexit Britain has pursued a more innovation-friendly approach, emphasising voluntary commitments and industry-led standards whilst maintaining regulatory oversight through existing authorities and the AI Safety Institute, established in 2023 and renamed the AI Security Institute in February 2025.

United States: America’s approach has paired market-driven innovation with targeted federal intervention, chiefly through executive action. A 2023 executive order required developers of the most advanced models to report safety test results to the government; it was rescinded in January 2025.

China: The Chinese government has implemented regulations focused on algorithmic transparency and content controls, whilst simultaneously investing heavily in domestic AI development to achieve technological self-sufficiency.

This regulatory patchwork reflects differing cultural attitudes toward technological risk, market intervention, and the proper role of government in technological development.

Competing Philosophical Approaches

The debate around AI governance fundamentally involves reconciling competing values and priorities:

Safety-First Perspective

Proponents of stronger regulatory frameworks argue that AI systems represent unprecedented technological power that could pose existential threats if misaligned with human values. This perspective emphasises:

• Precautionary principle: Given uncertain but potentially severe risks, constraints should be imposed until safety can be demonstrated.

• Democratic oversight: Technical decisions with broad societal implications require democratic legitimacy rather than being left to technologists alone.

• Mandatory standards: Voluntary commitments from technology companies are insufficient when market incentives for rapid deployment remain strong.

Innovation-Centric Perspective

Those advocating for lighter regulatory approaches emphasise:

• Technological progress: Overly restrictive regulations could hamper beneficial innovations that might address pressing global challenges.

• International competition: Excessive constraints in democratic nations may cede leadership to authoritarian regimes with different values.

• Practical limitations: Effective regulation of rapidly evolving technology requires technical understanding that governments often lack.

• Adaptable governance: Flexible standards and industry engagement may prove more effective than rigid regulatory frameworks.

Emerging Consensus Points

Despite these philosophical differences, several elements of consensus have emerged amongst thoughtful observers across the political spectrum:

1. Risk-based approaches: Regulatory intensity should be proportionate to the potential risks of different AI applications.

2. Technical standards: Developing robust, internationally recognised standards for safety evaluation is crucial.

3. Transparency requirements: Model developers should document capabilities, limitations, and safety measures.

4. Independent evaluation: Third-party assessment of powerful systems provides necessary objectivity.

5. International coordination: Preventing regulatory arbitrage requires harmonisation of basic safety standards across jurisdictions.

Addressing Key Challenges

Technical Evaluation

Determining whether AI systems meet safety standards presents significant technical challenges. Current approaches include:

• Red-teaming exercises: Systematic attempts to identify harmful capabilities or behaviours.

• Formal verification: Mathematical techniques to prove certain properties of systems.

• Interpretability research: Developing methods to understand AI decision-making processes.

• Benchmark testing: Standardised evaluations against recognised metrics.

None of these approaches alone provides comprehensive safety assurance, suggesting the need for layered evaluation systems and continuous monitoring.
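The idea of layered evaluation can be sketched in code. This is a minimal illustration rather than an established methodology: the layer names mirror the four approaches listed above, while the `LayerResult` type, the `layered_assessment` function, and the rule that every required layer must both run and pass are assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class LayerResult:
    """Outcome of one evaluation layer (hypothetical structure)."""
    layer: str
    passed: bool
    notes: str = ""


def layered_assessment(
    results: list[LayerResult], required: set[str]
) -> tuple[bool, list[str]]:
    """Return (cleared, problems).

    Assumed rule: a system clears only if every required layer
    was performed and passed. No single layer is sufficient.
    """
    problems: list[str] = []
    performed = {r.layer for r in results}

    # Required layers that were never run count against the system.
    for missing in sorted(required - performed):
        problems.append(f"{missing}: not performed")

    # Required layers that ran but failed are reported with any notes.
    for r in results:
        if r.layer in required and not r.passed:
            detail = f" ({r.notes})" if r.notes else ""
            problems.append(f"{r.layer}: failed{detail}")

    return (not problems, problems)


# Hypothetical usage: two layers pass, one fails, one was skipped.
REQUIRED = {"red_teaming", "formal_verification", "interpretability", "benchmarks"}
results = [
    LayerResult("red_teaming", True),
    LayerResult("benchmarks", True),
    LayerResult("interpretability", False, "unexplained behaviour"),
]
cleared, problems = layered_assessment(results, REQUIRED)
```

In this sketch, passing red-teaming and benchmarks is not enough: the skipped and failed layers both block clearance. Continuous monitoring would amount to re-running the same assessment as new results arrive.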

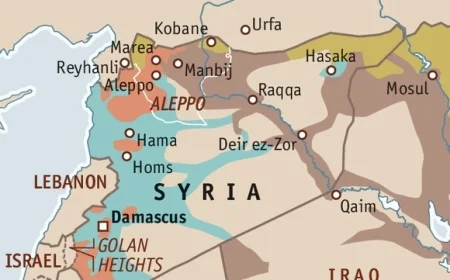

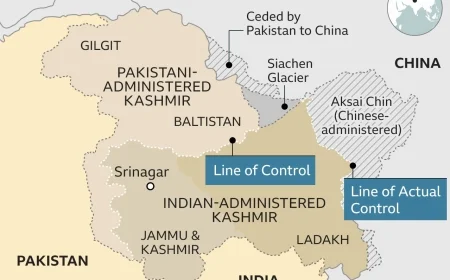

Global Coordination

The fundamentally international nature of AI development requires coordination mechanisms that respect national sovereignty whilst establishing common principles. Promising approaches include:

• International standards organisations: Bodies like ISO can develop technical standards with broad legitimacy.

• Multi-stakeholder governance: Including industry, civil society, and academic experts alongside governments.

• Bilateral agreements: Targeted cooperation between major AI-producing nations.

• Open-source collaboration: Shared safety tools and evaluation methodologies.

The lack of effective international institutions for technology governance remains a significant obstacle: existing bodies such as the UN lack technical capability, whilst more technically adept organisations often lack legitimacy.

Balancing Openness and Security

A particularly thorny challenge involves determining appropriate levels of openness for advanced AI systems:

Benefits of openness:

• Enables wider scrutiny and safety research

• Democratises access to powerful technologies

• Facilitates academic progress and talent development

• May prevent dangerous concentration of power

Risks of openness:

• Lowers barriers for malicious actors

• May accelerate unsafe capabilities

• Complicates coordination on safety standards

• Could trigger unproductive racing dynamics

This tension has no simple resolution and likely requires nuanced approaches that distinguish between different types of openness (e.g., model weights, training methodologies, safety techniques) and different contexts.

Pragmatic Path Forward

Effective governance of advanced AI systems will likely require a combination of approaches tailored to different contexts and levels of risk:

1. Graduated oversight: Lightweight processes for most AI applications, with increasingly stringent requirements for more powerful systems.

2. Adaptive regulation: Building feedback mechanisms that allow governance to evolve alongside the technology.

3. Distributed responsibility: Safety assurance distributed across developers, deployers, third-party evaluators, and regulators.

4. Technical foundations: Investing in safety research and evaluation methodologies to enable effective oversight.

5. International dialogue: Building shared understanding of risks and appropriate governance mechanisms across major AI-producing nations.

6. Public engagement: Ensuring broader societal values inform governance approaches through meaningful public consultation.
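Graduated oversight, the first item above, can be illustrated with a small sketch in which obligations accumulate as the risk tier rises. Everything here is hypothetical: the tier names, the `RiskTier` and `obligations_for` identifiers, and the assignment of obligations to tiers are assumptions for illustration and are not taken from any actual regulation. The obligations themselves are drawn from measures discussed in this analysis.

```python
from __future__ import annotations

from enum import IntEnum


class RiskTier(IntEnum):
    """Hypothetical tiers; real frameworks define their own categories."""
    MINIMAL = 1
    ELEVATED = 2
    FRONTIER = 3


# Obligations introduced at each tier (illustrative assignment only).
OBLIGATIONS_BY_TIER = {
    RiskTier.MINIMAL: ["capability and limitation documentation"],
    RiskTier.ELEVATED: ["benchmark testing", "red-teaming"],
    RiskTier.FRONTIER: ["independent third-party evaluation", "continuous monitoring"],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Accumulate obligations from the lowest tier up to the given tier."""
    collected: list[str] = []
    for level in RiskTier:  # iterates in definition order: MINIMAL, ELEVATED, FRONTIER
        if level <= tier:
            collected.extend(OBLIGATIONS_BY_TIER[level])
    return collected
```

The design choice worth noting is that tiers are cumulative: a system in a higher tier inherits every obligation of the tiers below it, so most applications face only the lightweight baseline.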

Conclusion

The governance of increasingly capable AI systems represents one of the most consequential policy challenges of our time. Neither unconstrained technological development nor heavy-handed regulation is likely to prove adequate on its own.

The most promising path forward involves creating governance systems that can evolve alongside the technology, combining technical rigour with democratic legitimacy. This requires sustained engagement between technical experts, policymakers, and the broader public to develop approaches that foster beneficial innovation whilst establishing meaningful guardrails against misuse and unintended consequences.

As foundation models continue to advance in capabilities, finding this balance becomes not merely desirable but essential to ensuring that artificial intelligence enhances rather than undermines human flourishing and autonomy.

Published by The Institute for Global Progress, April 2025
